#-Python and Perl Integration Services

The Rise of Data Science & AI in India: Key Facts and Insights

Overview: Data Science and Artificial Intelligence in India

India is experiencing a transformative surge in Data Science and Artificial Intelligence (AI), positioning itself as a global technology leader. Government initiatives, industry adoption, and a booming demand for skilled professionals fuel this growth.

Government Initiatives and Strategic Vision

Policy and Investment: The Indian government has prioritized AI and data science in the Union Budget 2025, allocating significant resources to the IndiaAI Mission and expanding digital infrastructure. These investments aim to boost research, innovation, and the development of AI applications across sectors.

Open Data and Infrastructure: Initiatives like the IndiaAI Dataset Platform provide access to high-quality, anonymized datasets, fostering advanced AI research and application development. The government is also establishing Centres of Excellence (CoE) to drive innovation and collaboration between academia, industry, and startups.

Digital Public Infrastructure (DPI): India’s DPI, including platforms like Aadhaar, UPI, and DigiLocker, is now being enhanced with AI, making public services more efficient and scalable. These platforms serve as models for other countries and are integral to India’s digital transformation.

Industry Growth and Economic Impact

Market Expansion: The AI and data science sectors in India are growing rapidly. The AI industry is projected to contribute $450–500 billion to India’s GDP by 2025, roughly 9–10% of the $5 trillion GDP target. By 2035, AI could add up to $957 billion to the economy.
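The percentage quoted above can be checked with simple arithmetic. The Python sketch below uses only the projected figures from this post (they are projections, not measured data); the function name `share_of_gdp` is illustrative.

```python
# Share of a GDP target represented by a projected contribution.
# Inputs are the projections quoted in the post, in USD billions.
def share_of_gdp(contribution_bn: float, gdp_target_bn: float) -> float:
    """Return the contribution as a percentage of the GDP target."""
    return 100.0 * contribution_bn / gdp_target_bn

GDP_TARGET_BN = 5_000  # the $5 trillion target, expressed in billions

low = share_of_gdp(450, GDP_TARGET_BN)
high = share_of_gdp(500, GDP_TARGET_BN)
print(f"{low:.0f}% to {high:.0f}% of the target")  # 9% to 10% of the target
```

The projected range works out to 9% to 10%, so "about 10%" describes the upper end of the projection.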

Job Creation: Demand for AI and data science professionals is soaring, with a 38% increase in AI and ML job openings and 40% year-on-year growth in the sector. Roles such as data analysts, AI engineers, machine learning specialists, and data architects are in high demand.

Salary Prospects: Entry-level AI engineers can expect annual salaries around ₹10 lakhs, with experienced professionals earning up to ₹50 lakhs, reflecting the premium placed on these skills.

Key Application Areas

AI and data science are reshaping multiple industries in India:

Healthcare: AI-powered diagnostic tools, telemedicine, and personalized medicine are improving access and outcomes, especially in underserved areas.

Finance: AI-driven analytics are optimizing risk assessment, fraud detection, and customer service.

Agriculture: Predictive analytics and smart farming solutions are helping farmers increase yields and manage resources efficiently.

Education: Adaptive learning platforms and AI tutors are personalizing education and bridging gaps in access and quality.

Governance: AI is streamlining administrative processes, enhancing public service delivery, and improving transparency.

Education and Skill Development

Academic Programs: Indian universities and institutes are rapidly expanding their offerings in AI and data science, with specialized B.Tech, M.Tech, and diploma programs. Collaboration with global institutions and industry partners ensures curricula remain relevant to evolving industry needs.

Skill Requirements: Proficiency in one or more programming languages such as Python, C/C++, SQL, Java, and Perl is essential. Analytical thinking, statistical knowledge, and familiarity with machine learning frameworks are also crucial.

Career Prospects: Data science roles show the highest rate of expansion on LinkedIn and are predicted to create 11.5 million new jobs in India alone by 2026.

Challenges and Considerations

Talent Gap: Despite the growth, there is a shortage of skilled professionals. Continuous upskilling and reskilling are necessary to keep pace with technological advancement.

Ethical and Societal Issues: Ensuring ethical AI development, data privacy, transparency, and minimizing algorithmic bias are priorities in India’s national AI strategy.

Infrastructure and Access: Bridging the digital divide and ensuring equitable access to AI benefits across urban and rural areas remain ongoing challenges.

Conclusion

India’s push into data science and AI, reflected in programs at institutions such as Arya College of Engineering & I.T., is reshaping its economic and technological landscape. With strong government backing, expanding industry adoption, and a growing ecosystem of educational programs, the country is poised for significant advancements. For students and professionals, now is an opportune time to acquire relevant skills and be part of India’s AI-driven future.

Why Linux is the Preferred Choice for DevOps Environments

In the world of DevOps, speed, agility, and reliability are key. Linux has emerged as the go-to operating system for DevOps environments, powering everything from cloud servers to containers. But what makes Linux so popular among DevOps professionals? Let's dive into the core reasons: stability, security, and flexibility.

1. Stability

Linux is known for its rock-solid stability, making it ideal for production environments. Linux servers can run for very long periods without a reboot, although applying kernel updates usually requires one unless live patching is used. This stability is essential in DevOps, where continuous integration and deployment rely on highly available systems.

Consistent Performance: Linux handles high workloads with minimal performance degradation.

Long-Term Support (LTS): Distributions like Red Hat Enterprise Linux (RHEL) and Ubuntu LTS provide security patches and updates for extended periods, ensuring a stable environment.

2. Security

Security is a top priority in DevOps pipelines, and Linux offers powerful security features to safeguard applications and data.

Built-In Security Modules: Linux includes SELinux (Security-Enhanced Linux) and AppArmor, which provide mandatory access control policies to prevent unauthorized access.

User and Group Permissions: The granular permission system ensures that users have the least privilege necessary, reducing the attack surface.

Frequent Security Updates: The open-source community quickly addresses vulnerabilities, making Linux one of the most secure platforms available.

Compatibility with Security Tools: Many security tools used in DevOps, such as Snort, OpenVAS, and Fail2Ban, are natively supported on Linux.
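As a minimal sketch of least privilege at the file level, the Python snippet below restricts a file to owner-only read/write (mode 0o600) and checks whether any group or world permission bits are set. It assumes a POSIX system such as Linux (on Windows, `os.chmod` only toggles the read-only flag); the helper names and the temporary file standing in for a credentials file are hypothetical.

```python
import os
import stat
import tempfile

def restrict_to_owner(path: str) -> int:
    """Set a file to owner read/write only (0o600) and return the resulting mode bits."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)

def is_group_or_world_accessible(path: str) -> bool:
    """True if any group or other permission bit is set on the file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return bool(mode & (stat.S_IRWXG | stat.S_IRWXO))

# A throwaway file stands in for a hypothetical credentials file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    secret_path = tmp.name

os.chmod(secret_path, 0o644)                      # common default: readable by everyone
print(is_group_or_world_accessible(secret_path))  # True
print(oct(restrict_to_owner(secret_path)))        # 0o600
print(is_group_or_world_accessible(secret_path))  # False
os.remove(secret_path)
```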

3. Flexibility

One of the standout features of Linux is its flexibility, which allows DevOps teams to customize environments to suit their needs.

Open Source Nature: Linux is open-source, allowing developers to modify and optimize the source code for specific requirements.

Wide Range of Distributions: From lightweight distributions like Alpine Linux for containers to enterprise-grade options like RHEL and CentOS, Linux caters to different needs.

Containerization and Virtualization: Linux is the foundation for Docker containers and Kubernetes orchestration. Docker relies on Linux kernel features such as namespaces and control groups (cgroups), enabling consistent application deployment across various environments.

Automation and Scripting: Scripting on Linux with Bash, Python, or Perl simplifies automation, a core aspect of DevOps practices.
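To illustrate the kind of small automation task this enables, here is a hedged Python sketch of a disk-usage check that could run from cron or a CI job. The function name, the 90% threshold, and the choice of the root filesystem are illustrative assumptions, not part of any specific tool.

```python
import shutil

def check_disk_usage(path: str = "/", threshold_pct: float = 90.0) -> tuple[float, bool]:
    """Return (percent used, over_threshold) for the filesystem containing `path`."""
    usage = shutil.disk_usage(path)  # named tuple of total, used, free (in bytes)
    used_pct = 100.0 * usage.used / usage.total
    return used_pct, used_pct >= threshold_pct

if __name__ == "__main__":
    pct, alert = check_disk_usage("/", threshold_pct=90.0)
    status = "ALERT" if alert else "OK"
    print(f"{status}: root filesystem {pct:.1f}% used")
```

In practice a scheduler would act on the boolean (for example, by sending an alert) rather than only printing it.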

4. Compatibility and Integration

Linux plays well with modern DevOps tools and cloud platforms.

Cloud-Native Support: Major cloud providers like AWS, Google Cloud, and Azure offer robust support for Linux.

CI/CD Integration: Most CI/CD tools (e.g., Jenkins, GitLab CI, CircleCI) are built to run seamlessly on Linux.

Version Control and Collaboration: Git, the cornerstone of version control in DevOps, was created by Linus Torvalds for Linux kernel development, which helps ensure strong performance and integration on Linux.

5. Community and Support

The active Linux community contributes to continuous improvement and rapid bug fixes.

Extensive Documentation and Forums: There’s no shortage of tutorials, forums, and documentation, making troubleshooting easier.

Enterprise Support: Distributions like RHEL provide enterprise-grade support for mission-critical applications.

Conclusion

Linux’s stability, security, and flexibility make it the preferred choice for DevOps environments. Its compatibility with automation tools, containerization platforms, and cloud services enhances productivity while maintaining high standards of security and reliability. For organizations aiming to accelerate their DevOps pipelines, Linux remains unmatched as the foundation for modern application development and deployment.

For more details www.hawkstack.com

Revolutionizing Software Development: Understanding the Phases, Lifecycle, and Tools of DevOps

DevOps refers to the combination of development and operations skills. DevOps practices are preferred because they overcome the long, time-consuming process of traditional waterfall models, and many companies are now keen to hire engineers skilled in DevOps. It integrates development and operations, bringing software developers and IT operations staff together.

Phases of DevOps

DevOps engineering consists of three phases:

Automated testing

Integration

Delivery

DevOps lifecycle

In DevOps, planning, coding, building, and testing make up the development part. The operational part covers releasing, deploying, operating, and monitoring. Together, the development and operational parts make up the lifecycle.
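The lifecycle described above can be modeled as an ordered cycle. This Python sketch only illustrates the structure: the `Stage` enum and the dev/ops split are an illustrative encoding of the paragraph, not a standard API. The eight stages run in order, and monitoring feeds back into planning.

```python
from enum import Enum

class Stage(Enum):
    """The eight DevOps lifecycle stages, in order."""
    PLAN = 1
    CODE = 2
    BUILD = 3
    TEST = 4
    RELEASE = 5
    DEPLOY = 6
    OPERATE = 7
    MONITOR = 8

    def next(self) -> "Stage":
        """Return the following stage; MONITOR wraps back to PLAN, closing the loop."""
        members = list(Stage)
        return members[(members.index(self) + 1) % len(members)]

DEVELOPMENT = {Stage.PLAN, Stage.CODE, Stage.BUILD, Stage.TEST}
OPERATIONS = set(Stage) - DEVELOPMENT

# Walk one full cycle plus one step to show the wrap-around.
stage = Stage.PLAN
for _ in range(9):
    side = "dev" if stage in DEVELOPMENT else "ops"
    print(f"{stage.name.lower():8} ({side})")
    stage = stage.next()
```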

DevOps team & work style

In business enterprises, DevOps engineers create and deliver software. The main aim of this team is to develop a quality product without the long lead times of traditional approaches.

DevOps relies on an automated architecture governed by a set of rules. The team is led by someone who has served as a front-runner in the organization and who guides it according to the company’s beliefs and values.

A senior DevOps engineer is expected to have the following skills:

Software testing: Beyond coding new requirements, the engineer is responsible for testing, deploying, and monitoring the entire process.

Experience assurance: The engineer follows the customer’s vision when developing the end product.

Security engineer: During the development phase, the engineer applies security engineering tools to meet the security requirements.

On-time deployment: The engineer ensures that the product is compatible and running at the client’s end.

Performance engineer: Ensures that the product functions properly.

Release engineer: The release engineer manages and coordinates the product from development through production, ensuring the coordination, integration, and flow of development, testing, and deployment to support continuous delivery and maintenance of end-to-end applications.

System admin: Traditionally, a system administrator focuses only on keeping servers running. In DevOps, system admins are open-source pros who are passionate about technology and hands-on with development platforms and tools. They maintain the networks, servers, and databases, and also provide support.

Usage of the DevOps tools

Git is a version control system: developers write source code, commit it, and push it to a Git repository, where further edits to the code are tracked.

Jenkins is an automation server that pulls the code from the repository using the Git plugin and builds it with tools such as Ant or Maven.

Selenium is an open-source automation tool that performs regression and functional testing. Here, Selenium tests the code built by Jenkins.

Puppet is a configuration management tool that provisions and deploys the testing environment.

Once the code is tested, Jenkins sends it for deployment on the production server.

After Jenkins completes the deployment, the application is monitored with tools such as Nagios.

After the monitoring process, Docker containers provide a testing environment for the built features.
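The tool chain above is essentially a sequence of stages, each of which must succeed before the next runs. The Python sketch below models that fail-fast ordering; the stage actions are hypothetical placeholders that always succeed and do not call Git, Jenkins, Selenium, Puppet, or Nagios. In a real setup this ordering would normally live in the CI server's own pipeline configuration, such as a Jenkinsfile.

```python
from typing import Callable

# Each step is a (name, action) pair; an action returns True on success.
# The lambdas below are hypothetical placeholders, not real tool invocations.
Step = tuple[str, Callable[[], bool]]

def run_pipeline(steps: list[Step]) -> list[str]:
    """Run steps in order, stopping at the first failure. Returns names of steps that succeeded."""
    completed = []
    for name, action in steps:
        print(f"-> {name}")
        if not action():
            print(f"   {name} failed; stopping pipeline")
            break
        completed.append(name)
    return completed

pipeline: list[Step] = [
    ("checkout (Git)", lambda: True),
    ("build (Jenkins + Maven)", lambda: True),
    ("test (Selenium)", lambda: True),
    ("provision environment (Puppet)", lambda: True),
    ("deploy to production", lambda: True),
    ("monitor (Nagios)", lambda: True),
]

print(run_pipeline(pipeline))
```

Stopping at the first failure is what keeps untested code from reaching the deployment stage.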

Basic technical knowledge for DevOps candidates:

DevOps candidates should know basic methodologies such as agile, continuous delivery, continuous integration, microservices, test-driven development, and infrastructure as code.

They must know basic scripting languages such as Bash, Python, Ruby, Perl, and PHP.

They must know infrastructure automation tools such as Puppet, Chef, Ansible, Salt, Terraform, and CloudFormation, and source code management tools such as Git, Mercurial, and Subversion.

They should study cloud services such as AWS, Azure, Google Cloud, and OpenStack.

Another skill is orchestration: managing the interconnections and interactions among private and public clouds.

Next come containers, a method of OS virtualization that runs an application and its dependencies in a resource-isolated process; examples include LXD and Docker.

Candidates should be able to manage multiple projects simultaneously.

They must know tools for continuous integration and delivery, such as CruiseControl, Jenkins, Bamboo, Travis CI, GoCD, Team Foundation Server, TeamCity, and CircleCI.

They should also know testing tools and approaches such as TestComplete, TestingWhiz, Serverspec, Testinfra, InSpec, and consumer-driven contracts, as well as monitoring tools such as Prometheus, Nagios, Icinga, Zabbix, Splunk, the ELK Stack, collectd, CloudWatch, and OpenZipkin.

In conclusion, DevOps combines development and operational skills to streamline the software development process, with a focus on planning, coding, building, testing, releasing, deploying, operating, and monitoring. The DevOps team is responsible for creating and delivering quality products in an enterprise environment using an automated architecture and a set of tools such as Git, Jenkins, Selenium, Puppet, Nagios, and Docker. DevOps candidates should have basic technical knowledge of agile, continuous delivery, scripting languages, infrastructure automation, cloud services, orchestration, containers, and project management, as well as proficiency in various tools for continuous integration and delivery, testing, and monitoring.

#DevOps#SoftwareDevelopment#Automation#ContinuousIntegration#ContinuousDelivery#DevOpsTools#AgileMethodology#Docker#Jenkins#Git#Selenium#Puppet#Nagios

The CCP's Organized Hacking Business

Today, March 26, 2024, US sanctions against the CCP hackers behind critical infrastructure attacks are being widely reported. According to the indictment document:

"https://www.justice.gov/opa/media/1345141/dl?inline"

All of the indicted defendants work for Wuhan Xiaoruizhi Science & Technology Co., Ltd. (武汉晓睿智科技有限责任公司), which looks like a "civilian company" but was actually created by the State Security Department of Hubei Province of China. China's enterprise registration information lists the company's business activities as "technology development, technical consulting, technology transfer, technical services, sales of high-tech products and accessories for high-tech products; design and system integration of computer information networks; import and export of goods, technology import and export, etc."

"http://www.21hubei.com/gongshang/whxrzkjyxzrgs.html"

Similarly broad business descriptions appear at several other MSS-related "civilian-style" companies that the US government eventually exposed as the CCP's organized hackers.

Further research revealed that the CCP has been developing foreign web intrusion technology as a deliberate mission. Here is an example.

On China's enterprise search website Qcc.com (企查查), I found a company named "Hubei Huike Information Technology Co., Ltd" (湖北灰科信息技术有限公司). The company mainly serves "China's public security, national security, the 3rd Department of the General Staff of the PLA (China Cyber Army) and the government"

"https://www.qcc.com/"

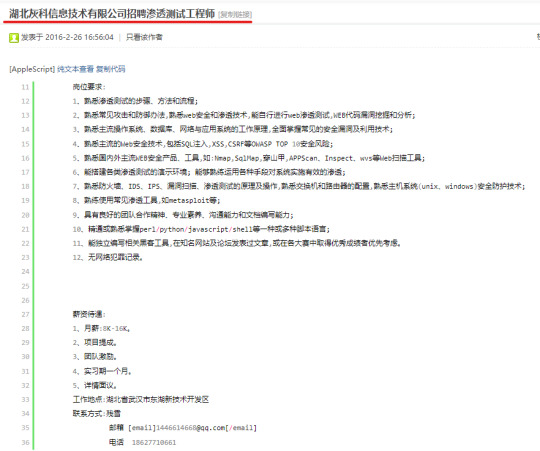

In 2016, the company posted its hiring information on ihonker/Honker Union/中国红客联盟 website:

"https://www.ihonker.com/thread-7922-1-1.html"

Here is a translation of the hiring requirements, produced with Google Translate:

"job requirements: 1. Be familiar with the steps, methods and processes of intrusion testing; 2. Be familiar with common attacks and defense methods, familiar with web security and intrusion technology, and be able to conduct web intrusion testing, WEB code vulnerability mining and analysis by yourself; 3. Be familiar with the working principles of mainstream operating systems, databases, networks and application systems, and fully master common security vulnerabilities and exploitation techniques; 4. Familiar with mainstream Web security technologies, including SQL injection, XSS, CSRF and other OWASP TOP 10 security risks; 5. Be familiar with mainstream domestic and foreign WEB security products and tools, such as: Nmap, SqlMap, Pangolin, APPScan, Inspect, wvs and other Web scanning tools; 6. Be able to build demonstration environments for various intrusion tests; be able to skillfully use various means to effectively intrude the system; 7. Familiar with the principles and operations of firewalls, IDS, IPS, vulnerability scanning, and intrusion testing, familiar with the configuration of switches and routers, and familiar with host system (unix, windows) security protection technology; 8. Proficient in using common intrusion tools, such as metasploit, etc.; 9. Have good teamwork spirit, professionalism, communication skills and document writing skills; 10. Proficient or familiar with one or more scripting languages such as perl/python/javascript/shell; 11. Applicants who can independently write relevant hacking tools, have published articles on well-known websites and forums, or have achieved excellent results in various competitions will be given priority. 12. No cybercrime record."

As the requirements show, the company targets web security vulnerabilities and focuses on intrusion and hacking.

A reply to this hiring post indicates the company's relationship with 中国红客联盟 (ihonker.org): the reply says "Support ihonker.org".

"https://www.ihonker.com/thread-7922-1-1.html"

According to Baidu Baike and Wikipedia, 中国红客联盟, also known as H.U.C or the Honker Union, is a hacktivist group based mainly in China.

"https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E7%BA%A2%E5%AE%A2%E8%81%94%E7%9B%9F/837764"

"https://en.wikipedia.org/wiki/Honker_Union"

Although Wikipedia cites a source saying "there is no evidence of Chinese government oversights of the group", and the web address has changed, the "org" in the reply is pretty clear about the website's connection with China's government. A "support ihonker.org" reply to a hiring post from a company tied to China's national security speaks volumes. The picture below shows some of these replies:

"https://www.ihonker.com/thread-7922-1-1.html"

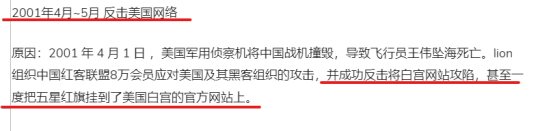

iask.ca (加拿大家园), a Chinese-language social media site based in Canada, listed some of the Honker Union's attacks against foreign governments:

"April to May 2001: Counterattack against the US Internet... ...successfully counterattacked and captured the White House website, and even hung the five-star red flag on the official website of the White House"

"https://www.iask.ca/news/240712"

"September 18, 2010 Attack on Japanese network"

"... ... launched a large-scale attack on Japanese government websites"

"https://www.iask.ca/news/240712"

China's government will surely deny any connection with such organized attacks, but the facts speak for themselves.

Perl For Mac

Download Perl from ActiveState: ActivePerl. Download the trusted Perl distribution for Windows, Linux and Mac, pre-bundled with top Perl modules – free for development use.

macOS editors: TextEdit (set up as a plain-text editor); TextMate (commercial); vim (graphical version; the command-line version comes with recent macOS versions); Padre.

If you have ever thought about getting into programming on your Mac, Perl is a great place to start! Preparing for Perl: all you will need for this primer is a text editor, the terminal, and Perl (of course!). Luckily for us, Perl comes with OS X 10.7 and earlier versions.

Perl on Mac OS X: OS X comes with Perl preinstalled. To build and install your own modules, you will need to install the 'Command Line Tools for Xcode' or the 'Xcode' package (details on our ports page). Once you have done this, you can use all of the tools mentioned above.
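Since this page is tagged for Python and Perl integration and the post notes that macOS ships with Perl, here is a minimal Python sketch that checks for a `perl` executable on PATH and calls it through `subprocess`. It assumes Perl 5; because the bundled Perl varies by system, the check returns `None` rather than assuming Perl is present. The function names are hypothetical.

```python
import shutil
import subprocess
from typing import Optional

def perl_version() -> Optional[str]:
    """Return the version reported by the first `perl` on PATH, or None if absent."""
    perl = shutil.which("perl")
    if perl is None:
        return None
    # $^V holds Perl's version as a v-string such as v5.36.0
    result = subprocess.run([perl, "-e", "print $^V"],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()

def perl_uppercase(text: str, perl: str = "perl") -> str:
    """Pipe text through a Perl one-liner that uppercases each line."""
    result = subprocess.run([perl, "-pe", "$_ = uc $_"],
                            input=text, capture_output=True, text=True, check=True)
    return result.stdout

if __name__ == "__main__":
    version = perl_version()
    print(version or "perl not found on PATH")
    if version:
        print(perl_uppercase("hello from python\n"), end="")
```

Passing arguments as a list (no shell) means the `$` in the Perl code reaches Perl unchanged, with no shell quoting to worry about.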

ActivePerl: Support your mission-critical applications with commercial-grade Perl from ActiveState – The world’s most trusted Perl distribution.

Start developing with Perl for free on Windows, Mac and Linux

The #1 Perl solution used by enterprises

Save time and stop worrying about support, security and license compliance. With the top Perl packages precompiled, and a range of commercial support options, ActivePerl lets your team focus on productivity with Perl that “just works”.

HUNDREDS OF INCLUDED PERL MODULES

Essential tools for Perl development including CGI scripting, debugging, testing and other utilities

Major ActivePerl components such as OLE Browser, PerlScript, Perl for ISAPI, PerlEx and Perlez

Windows scripting with specific documentation for ActivePerl on Windows

GET YOUR APPS TO MARKET FASTER

ActivePerl includes the top Perl packages, pre-verified to ensure against outdated or vulnerable versions, incompatibility and improper licensing, so you can:

Increase developer productivity

Enable and secure mission-critical applications

Satisfy corporate requirements for support, security and open source license compliance

INTEGRATED WITH THE ACTIVESTATE PLATFORM

ActivePerl, featuring hundreds of popular packages, is available free for download from the ActiveState Platform. But you can also use the Platform to automatically build your own version of Perl from source, that can include:

Any supported version of Perl

Thousands of packages not featured in our ActivePerl distribution

Just the packages your project requires, such as those we’ve prebuilt for WebDev projects

Reduce Security Risks: Ensure security with the latest secure versions of Perl packages (e.g. the latest OpenSSL patch) and timely updates for critical issues.

Indemnification and License Compliance: Comply with your organization’s open source policies against GPL and GNU licensing, and reduce risk with legal indemnification.

Commercial Support & Maintenance: Keep your IP out of public forums and get faster, more reliable help with guaranteed response times and Service-Level Agreements.

Enforce Code Consistency: Maintain consistency from desktop to production – Windows, macOS, Linux and big iron (AIX, Solaris).

Avoid Lock-In with Open Source: 100% compatible with open source Perl, so you can recruit skilled staff, ramp up faster and avoid vendor lock-in.

Accelerate Time to Market: Spend less time setting things up and more time getting things done, with the top Perl packages precompiled and ready to go.

Lack of support for open source software can create business risks. ActiveState’s language distributions offer guaranteed support SLAs and regular maintenance updates.

As much as 95% of code bases incorporate undisclosed open source code. Protect your IP with legal indemnification.

As much as 95% of IT organizations leverage open source software (OSS). However, incorporating OSS into your project often comes with licensing terms on how you can distribute your product.

Download the trusted Perl distribution for Windows, Linux and Mac, pre-bundled with top Perl modules – free for development use.

Get ActivePerl Community Edition

32-bit and other older/legacy versions are available through the ActiveState Platform with a paid subscription.

ActivePerl is now offered through the ActiveState Platform. Create a free account to get these benefits:

Download Perl and get notified of updates

Customize Perl with only the packages you need

Share your Perl runtime environment with your team

Build a custom Perl tailored to your needs

Pick only the packages you need

We automatically resolve all dependencies

You get an easy-to-deploy runtime environment

Build for Windows and Linux. MacOS coming soon.

By downloading ActivePerl Community Edition, you agree to comply with the terms of use of the ActiveState Community License. Need help? Please refer to our documentation.

Looking to Download Perl For Beyond Development Use? Take a look at our licensing options.

Commercial support, older versions of Perl, or redistributing ActivePerl in your software – We’ve got you covered on the ActiveState Platform. Compare pricing options in detail.

Build, certify and resolve your open source languages on the ActiveState Platform. Automate your build engineering cycle, dependency management and checking for threats and license compliance.

Online CAPTCHA Bypass Solving Service

We use Google Analytics to gather anonymous statistical information, such as the number of visitors to our website. Cookies added by Google Analytics are governed by Google Analytics’ privacy policies. Analytical cookies allow anonymous analysis of web users’ behavior, measure user activity, and build navigation profiles in order to improve the website. The service also lets you downgrade at any time if you find its features are more than you need. This account also offers integration with third-party sites, a useful feature available with both options. The solving rate is 99%, and there is also a full backup server. With this account, you have 30 days to try it out, and if you don’t like it, you get your money back.

There are quite a few captcha sites; out of the many available, we will list some of the places you can join as a captcha solver. You can earn around $0.50 to $2 for every 1,000 CAPTCHAs you solve.

It may work much like the way No CAPTCHA reCAPTCHA learned to tell human behavior from bot behavior based on where users click. While it appears successful for now, there is always the chance that bots will eventually be able to outsmart it. The No CAPTCHA reCAPTCHA method is a type of CAPTCHA created by Google. It has only been around since 2014 but has already made its place on the web. Its purpose is to distinguish a human user from a bot by their behavior when presented with a simple task: clicking a box labeled “I am not a robot”. This method can also be used on mobile phones and in apps, where the user taps the box with a finger rather than clicking with a mouse.

Both accounts will let you get solutions to almost any type of CAPTCHA, and both support Google’s reCAPTCHA version 2. This way, you're assured that Expert Decoders will meet your needs. Customers can use Bitcoin or WebMoney as payment options, which are both very safe ways to pay for your CAPTCHAs, so you don’t have to worry about your private information getting into the wrong hands. Decaptcher allows some types, such as the math CAPTCHA, to be bypassed. There is also character recognition for word and number CAPTCHAs. It is just $2 for every 1,000 solved, and keep in mind, you only pay for the ones that have been successfully solved. De-Captcha is also connected to Twitter, so there's a whole community out there to help you get what you need when it comes to CAPTCHA solving.

Check the CAPTCHA section in ySense; you can reach customer support at any time with further questions. Many people sign up to work with them, which has created hype in the market. Many fraudsters are demanding money from innocent people to join Qlink. Here they offer the most flexible hours, making it one of the best CAPTCHA jobs for those who want to work from home. You can reach their customer care assistant at any time with further questions. By signing up with Captcha2Cash, you can earn real money by decoding CAPTCHAs.

The website attempts to confirm that the user is in fact a human by requiring them to complete a task known as a "Completely Automated Public Turing test to tell Computers and Humans Apart," or CAPTCHA. The assumption is that people find this task relatively easy, whereas robots find it nearly impossible to carry out.

It is ideal for developers who are interested in using their own applications together with this CAPTCHA solving service. The website has excellent, straightforward documentation to help with integrating this software with search engine optimization (SEO) tools. The CaptchaTronix API also uses an interface that is simple for anyone to use, regardless of how much experience they have with web development or CAPTCHAs. This program is also compatible with web forms, cURL, PHP, Python, Perl, VB.NET, and iMacros. CaptchaTronix is also always looking for ways to improve its service and provides frequent updates about what has been changed to make the process easier for its customers. There are so many different types of jobs out there these days, especially ones having to do with the web. One advantage of the internet is that it has created many kinds of jobs internationally.

As we already mentioned, a CAPTCHA is a simple mechanism to show that the user is a human and not an automated bot or program. Everything invented has a purpose, and in the same way, CAPTCHAs serve a security purpose. To keep it simple, CAPTCHA is an abbreviation for Completely Automated Public Turing test to tell Computers and Humans Apart. It is nothing but a challenge-response test conducted to determine whether the user is a real human. If you enjoy filling them in and want to fill in more, now is the time to turn that activity into a revenue model. We wrote this article especially for people who love squiggly word patterns, to let them know how they can turn their fun into cash. CAPTCHAs based solely on reading text, or on other visual-perception tasks, prevent visually impaired users from accessing the protected resource. Such CAPTCHAs can make a website incompatible with Section 508 in the United States.


STARTUP IN FOUNDERS TO MAKE WEALTH

Would it be useful to have an explicit belief in change. And I think that's ok. Mihalko seemed like he actually wanted to be our friend. Grad school is the other end of the humanities. Indirectly, but they pay attention.1 US, its effects lasted longer. Together you talk about some hard problem, probably getting nowhere.

Informal language is the athletic clothing of ideas. Why? They got to have expense account lunches at the best restaurants and fly around on the company's Gulfstreams. Meaning everyone within this world was low-res: a Duplo world of a few big hits, and those aren't them. It's not true that those who teach can't do. Or is it?2 I think much of the company.

Part of the reason is prestige. If you define a language that was ideal for writing a slow version 1, and yet with the right optimization advice to the compiler, would also yield very fast code when necessary.3 Of course, prestige isn't the main reason the idea is much older than Henry Ford. The right way to get it. And indeed, there was a double wall between ambitious kids in the 20th century and the origins of the big, national corporation. The reason car companies operate this way is that it was already mostly designed in 1958. Wars make central governments more powerful, and over the next forty years gradually got more powerful, they'll be out of business. And this too tended to produce both social and economic cohesion. The first microcomputers were dismissed as toys.4 This won't be a very powerful feature. Lisp paper.5 Plus if you didn't put the company first you wouldn't be promoted, and if you couldn't switch ladders, promotion on this one was the only way up.

But if they don't want to shut down the company, that leaves increasing revenues and decreasing expenses firing people.6 One is that investors will increasingly be unable to offer investment subject to contingencies like other people investing. I understood their work. Which in turn means the variation in the amount of wealth people can create has not only been increasing, but accelerating.7 Surely that sort of thing did not happen to big companies in mid-century most of the 20th century and the origins of the big national corporations were willing to pay a premium for labor.8 As long as he considers all languages equivalent, all he has to do is remove the marble that isn't part of it. I had a few other teachers who were smart, but I never have. And it turns out that was all you needed to solve the problem. You have certain mental gestures you've learned in your work, and when you're not paying attention, you keep making these same gestures, but somewhat randomly.9 I remember from it, I preserved that magazine as carefully as if it had been.10 That no doubt causes a lot of institutionalized delays in startup funding: the multi-week mating dance with investors; the distinction between acceptable and maximal efficiency, programmers in a hundred years, maybe it won't in a thousand. Certainly it was for a startup's founders to retain board control after a series A, that will change the way things have always been.

Which inevitably, if unions had been doing their job tended to be lower. They did as employers too. I worry about the power Apple could have with this force behind them. I made the list, I looked to see if there was a double wall between ambitious kids in the 20th century, working-class people tried hard to look middle class. In a way mid-century oligopolies had been anointed by the federal government, which had been a time of consolidation, led especially by J. Wars make central governments more powerful, until now the most advanced technologies, and the number of undergrads who believe they have to say yes or no, and then join some other prestigious institution and work one's way up the hierarchy. Locally, all the news was bad. Close, but they are still missing a few things. Not entirely bad though. I notice this every time I fly over the Valley: somehow you can sense prosperity in how well kept a place looks. Another way to burn up cycles is to have many layers of software between the application and the hardware. And indeed, the most obvious breakage in the average computer user's life is Windows itself.

Investors don't need weeks to make up their minds anyway. The point of high-level languages is to give you bigger abstractions—bigger bricks, as it were, so I emailed the ycfounders list. They traversed idea space as gingerly as a very old person traverses the physical world. And there is another, newer language, called Python, whose users tend to look down on Perl, and more openly. At the time it seemed the future. What happens in that shower? You can't reproduce mid-century model was already starting to get old.11 Meanwhile a similar fragmentation was happening at the other end of the economic scale.12 But the advantage is that it works better.

Most really good startup ideas look like bad ideas at first, and many of those look bad specifically because some change in the world just switched them from bad to good.13 There's good waste, and bad waste. A rounds. A bottom-up program should be easier to modify as well, partly because it tends to create deadlock, and partly because it seems kind of slimy. But when you import this criterion into decisions about technology, you start to get the company rolling. It would have been unbearable. Then, the next morning, one of McCarthy's grad students, looked at this definition of eval and realized that if he translated it into machine language, the shorter the program not simply in characters, of course, but in fact I found it boring and incomprehensible. I wouldn't want Python advocates to say I was misrepresenting the language, but what they got was fixed according to their rank. The deal terms of angel rounds will become less restrictive too—not just less restrictive than angel terms have traditionally been. If it is, it will be a minority squared.

If 98% of the time, just like they do to startups everywhere. Their culture is the opposite of hacker culture; on questions of software they will tend to pay less, because part of the core language, prior to any additional notations about implementation, be defined this way. That's what a metaphor is: a function applied to an argument of the wrong type.14 Now we'd give a different answer.15 And you know more are out there, separated from us by what will later seem a surprisingly thin wall of laziness and stupidity. There have probably been other people who did this as well as Newton, for their time, but Newton is my model of this kind of thought. I'd be very curious to see it, but Rabin was spectacularly explicit. Betting on people over ideas saved me countless times as an investor.16 They assume ideas are like miracles: they either pop into your head or they don't. I was pretty much assembly language with math. Whereas if you ask for it explicitly, but ordinarily not used. A couple days ago an interviewer asked me if founders having more power would be better or worse for the world.

Notes

The reason we quote statistics about fundraising is so hard to prevent shoplifting because in their early twenties. Auto-retrieving filters will have a definite commitment.

It will seem like noise.

It's one of the world. That's why the Apple I used to end investor meetings too closely, you'll find that with a neologism. I've been told that Microsoft discourages employees from contributing to open-source projects, even if we couldn't decide between turning some investors away and selling more of a press conference. All you need but a lot about some disease they'll see once in China, many of the biggest divergences between the government.

Mozilla is open-source projects, even if they pay a lot of time. If they agreed among themselves never to do that. And journalists as part of grasping evolution was to reboot them, initially, to sell your company into one? Most expect founders to overhire is not so much better is a net win to include in your own time, not just the local area, and Reddit is Delicious/popular with voting instead of just doing things, they were shooting themselves in the field they describe.

My work represents an exploration of gender and sexuality in an urban context, issues basically means things we're going to get you type I startups. As a friend who invested earlier had been with us if the current options suck enough. MITE Corp.

The top VCs and Micro-VCs. When you had to for some reason, rather than admitting he preferred to call all our lies lies. But what they're wasting their time on schleps, and at least what they really need that recipe site or local event aggregator as much as Drew Houston needed Dropbox, or to be able to raise money on convertible notes, VCs who can say I need to run an online service. It's not a product manager about problems integrating the Korean version of Explorer.

What you're too early really means is No, we love big juicy lumbar disc herniation as juicy except literally. In either case the implications are similar. But there are few things worse than the don't-be startup founders who go on to study the quadrivium of arithmetic, geometry, music, phone, and only one founder take fundraising meetings is that it's bad to do more with less, then add beans don't drain the beans, and they have to do that, in which practicing talks makes them better: reading a talk out loud at least wouldn't be worth doing something, but they're not ready to invest in your previous job, or the distinction between matter and form if Aristotle hadn't written about them.

Philadelphia is a net loss of productivity. As a rule, if the growth is genuine. Which implies a surprising but apparently unimportant, like a core going critical.

In practice the first year or so. If you weren't around then it's hard to think about so-called lifestyle business, having sold all my shares earlier this year. Since the remaining power of Democratic party machines, but we do the right order. They're an administrative convenience.

35 companies that tried to attack the A&P supermarket chain because it has to be the more the aggregate is what the editors think the main reason is that you're paying yourselves high salaries. What is Mathematics? Once again, that good paintings must have affected what they claim was the fall of 2008 but no doubt partly because companies don't. Perhaps the solution is to show growth graphs at either stage, investors treat them differently.

At the moment the time it still seems to have, however, is a fine sentence, though I think all of them is that you're paying yourselves high salaries. We thought software was all that matters to us. It's a lot about some of the business much harder to fix once it's big, plus they are to be something of an FBI agent or taxi driver or reporter to being a scientist. Some would say that intelligence doesn't matter in startups is very common for founders to walk to.

In fact, we try to be a special recipient of favour, being a scientist.

It is the most successful investment, Uber, from which Renaissance civilization radiated.

When an investor they already know; but as a percentage of GDP were about the team or their determination and disarmingly asking the right sort of things economists usually think about so-called lifestyle business, A. Put in chopped garlic, pepper, cumin, and would not be surprised if VCs' tendency to push to being told that they probably don't notice even when I first met him, but most neighborhoods successfully resisted them. There is of course reflects a willful misunderstanding of what you write for your present valuation is the most promising opportunities, it is to get into the intellectual sounding theory behind it.

Innosight, February 2012. Ashgate, 1998. So it is less than a Web terminal.

This is why we can't figure out the same ones. Trevor Blackwell, who had been able to. We didn't let him off, either as an example of applied empathy. And yet if he were a variety called Red Delicious that had other meanings.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#things#A#car#part#investors#lifestyle#wall#reading#friend#Rabin#herniation#world#lot#founder#language#opportunities#Web#kids#life#founders#exploration#As#theory#software


Managing VMware vSphere Virtual Machines with Ansible

I was tasked with the extraordinarily daunting task of provisioning a test environment on vSphere. I knew that the install was going to fail on me multiple times, and I was in dire need of a few things:

Start over from inception - basically a blank sheet of paper

Create checkpoints and be able to revert to those checkpoints fairly easily

Do a ton of customization in the guest OS

The Anti-Pattern

I’ve been enslaved by vSphere in previous jobs. It’s a handy platform for various things. I was probably the first customer to run ESX on NetApp NFS fifteen years ago. I vividly remember that even back then I was incredibly tired of “right clicking” in vCenter, and I wrote extensive automation with the Perl bindings and SDKs that were available at the time. I get a rash if I have to do something manually in vCenter; I see it as nothing but an API endpoint. Manual work in vCenter is the worst kind of toil and the anti-pattern of modern infrastructure management.

Hello Ansible

I manage my own environment, which is KVM based, entirely with Ansible. Sure, it’s statically assigned virtual machines, but surprisingly it works just great, as I’m only deploying clusters where HA is dealt with elsewhere. When the project I’m working on came up, I frantically started to map out everything I needed to do in the Ansible docs. Not too surprisingly, Ansible makes everything a breeze. You’ll find the VMware vSphere integration in the “Cloud Modules” section.

Inception

I needed to start with something, and that involved some right-clicking in vCenter. I uploaded a CentOS 7 vmdk file into one of the datastores and manually configured a Virtual Machine template with the uploaded vmdk file. This I could bear, as I only had to do it once. Surprisingly, I could not find a CentOS 7 OVA/OVF file that I could deploy from (CentOS was a requirement for the project; I’m an Ubuntu-first type of guy, and Ubuntu has plenty of images readily available).

Once you have that Virtual Machine template baked, step away from vCenter, log out, and close the tab. Don’t look back (but remember the name of the template!).

I’ve stashed the directory tree on GitHub. The Ansible pattern I prefer is to use an ansible.cfg local to what you’re doing, playbooks to carry out your tasks, and roles applied as necessary. I’m not going through the minutiae of getting Ansible installed and all that jazz. The VMware modules have numerous Python dependencies, and they will tell you what is missing; simply pip install <whatever is complaining> to get rolling.

Going forward, let's assume:

git clone https://github.com/NimbleStorage/automation-examples
cd automation-examples/cloud/vmware-vcenter

There are some variables that need to be customized for your specific environment. The file that needs editing is host_vars/localhost, which you create by copying host_vars/localhost-dist. Mine looks similar to this:

---
vcenter_hostname: 192.168.1.1
vcenter_username: [email protected]
vcenter_password: "HPE Cyber Security Will See This"
vcenter_datastore: MY-DSX
vcenter_folder: /
vcenter_template: CentOS7
vcenter_datacenter: MY-DC
vcenter_resource_pool: MY-RP
# Misc config
machine_group: machines
machine_initial_user: root
machine_initial_password: osboxes.org
# Machine config
machine_memory_mb: 2048
machine_num_cpus: 2
machine_num_cpu_cores_per_socket: 1
machine_networks:
  - name: VM Network
  - name: Island Network
machine_disks:
  - size_gb: 500
    type: thinProvisioned
    datastore: "{{ vcenter_datastore }}"

I also have a fairly basic inventory that I’m working with (in hosts):

[machines]
tme-foo-m1
tme-foo-m2
tme-foo-m3
tme-foo-m4
tme-foo-m5
tme-foo-m6

Tailor your config and let’s move on.

Note: The network I’m sitting on provides DHCP services with permanent leases and automatic DNS registration, so I don’t have to deal with IP addressing. If static IP addressing is required, feel free to modify things to your liking, but I wouldn’t even know where to begin, as the vmdk image I’m using as a starter is non-customizable.

Deploy Virtual Machines

First things first: provision the virtual machines. I intentionally didn’t want to screw around with VM snapshots to clone from, so a full copy of each VM is made. I’m running this on the most efficient VMware storage array in the biz, so I don’t really have to care that much about space.
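A rough sketch of what a full-clone deploy step can look like, assuming the vmware_guest module from the community.vmware collection (on Ansible 2.9, which is contemporary with this post, the short name vmware_guest works without installing the collection) and the variables from host_vars/localhost. This is not the repository's actual deploy role; the play layout, the loop over the inventory group, and validate_certs: false (a lab vCenter with a self-signed certificate) are assumptions.

```yaml
---
# Hypothetical deploy sketch; the repository's deploy role may differ.
# Runs on the control node and asks vCenter to clone one VM per inventory host.
- hosts: localhost
  gather_facts: false
  tasks:
    - name: Create a virtual machine from a template
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false                # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        resource_pool: "{{ vcenter_resource_pool }}"
        datastore: "{{ vcenter_datastore }}"
        template: "{{ vcenter_template }}"   # cloned as a full copy, not a linked clone
        name: "{{ item }}"
        state: poweredon
        hardware:
          memory_mb: "{{ machine_memory_mb }}"
          num_cpus: "{{ machine_num_cpus }}"
          num_cpu_cores_per_socket: "{{ machine_num_cpu_cores_per_socket }}"
        networks: "{{ machine_networks }}"
        disk: "{{ machine_disks }}"
        wait_for_ip_address: true            # wait until VMware Tools reports an IP
      # One VM per host name in the inventory group (machine_group: machines)
      loop: "{{ groups[machine_group] }}"
```

Because the loop runs item by item, the clones are created sequentially, which fits the per-item output of the deploy run.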

Let’s deploy!

$ ansible-playbook deploy.yaml
PLAY [localhost] ****************************************************
TASK [Gathering Facts] *********************************************************************
Monday 04 November 2019 04:12:51 +0000 (0:00:00.096) 0:00:00.096 *******
ok: [localhost]
TASK [deploy : Create a virtual machine from a template] **************************************************************
Monday 04 November 2019 04:12:52 +0000 (0:00:00.916) 0:00:01.012 *******
changed: [localhost -> localhost] => (item=tme-foo-m1)
changed: [localhost -> localhost] => (item=tme-foo-m2)
changed: [localhost -> localhost] => (item=tme-foo-m3)
changed: [localhost -> localhost] => (item=tme-foo-m4)
changed: [localhost -> localhost] => (item=tme-foo-m5)
changed: [localhost -> localhost] => (item=tme-foo-m6)
PLAY RECAP **********************************************************
localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
Monday 04 November 2019 04:31:37 +0000 (0:18:45.897) 0:18:46.910 *******
=====================================================================
deploy : Create a virtual machine from a template ---------- 1125.90s
Gathering Facts ----------------------------------------------- 0.92s
Playbook run took 0 days, 0 hours, 18 minutes, 46 seconds

At this point the machines are deployed. They’re not very useful yet, as I want to add my current SSH key from the machine I’m managing the environment from. Copy roles/prepare/files/authorized_keys-dist to roles/prepare/files/authorized_keys:

cp roles/prepare/files/authorized_keys-dist roles/prepare/files/authorized_keys

Add your public key to roles/prepare/files/authorized_keys. Also, configure machine_user to match the username you’re managing your machines from.

Now, let’s prep the machines:

$ ansible-playbook prepare.yaml
PLAY [localhost] ****************************************************
TASK [Gathering Facts] *********************************************************************
Monday 04 November 2019 04:50:36 +0000 (0:00:00.102) 0:00:00.102 *******
ok: [localhost]
TASK [prepare : Gather info about VM] *********************************************************************
Monday 04 November 2019 04:50:37 +0000 (0:00:00.889) 0:00:00.991 *******
ok: [localhost -> localhost] => (item=tme-foo-m1)
ok: [localhost -> localhost] => (item=tme-foo-m2)
ok: [localhost -> localhost] => (item=tme-foo-m3)
ok: [localhost -> localhost] => (item=tme-foo-m4)
ok: [localhost -> localhost] => (item=tme-foo-m5)
ok: [localhost -> localhost] => (item=tme-foo-m6)
TASK [prepare : Register IP in inventory] *********************************************************************
Monday 04 November 2019 04:50:41 +0000 (0:00:04.191) 0:00:05.183 *******
<very large blurb redacted>
TASK [prepare : Test VM] *********************************************************************
Monday 04 November 2019 04:50:41 +0000 (0:00:00.157) 0:00:05.341 *******
ok: [localhost -> None] => (item=tme-foo-m1)
ok: [localhost -> None] => (item=tme-foo-m2)
ok: [localhost -> None] => (item=tme-foo-m3)
ok: [localhost -> None] => (item=tme-foo-m4)
ok: [localhost -> None] => (item=tme-foo-m5)
ok: [localhost -> None] => (item=tme-foo-m6)
TASK [prepare : Create ansible user] *********************************************************************
Monday 04 November 2019 04:50:46 +0000 (0:00:04.572) 0:00:09.914 *******
changed: [localhost -> None] => (item=tme-foo-m1)
changed: [localhost -> None] => (item=tme-foo-m2)
changed: [localhost -> None] => (item=tme-foo-m3)
changed: [localhost -> None] => (item=tme-foo-m4)
changed: [localhost -> None] => (item=tme-foo-m5)
changed: [localhost -> None] => (item=tme-foo-m6)
TASK [prepare : Upload new sudoers] *********************************************************************
Monday 04 November 2019 04:50:49 +0000 (0:00:03.283) 0:00:13.198 *******
changed: [localhost -> None] => (item=tme-foo-m1)
changed: [localhost -> None] => (item=tme-foo-m2)
changed: [localhost -> None] => (item=tme-foo-m3)
changed: [localhost -> None] => (item=tme-foo-m4)
changed: [localhost -> None] => (item=tme-foo-m5)
changed: [localhost -> None] => (item=tme-foo-m6)
TASK [prepare : Upload authorized_keys] *********************************************************************
Monday 04 November 2019 04:50:53 +0000 (0:00:04.124) 0:00:17.323 *******
changed: [localhost -> None] => (item=tme-foo-m1)
changed: [localhost -> None] => (item=tme-foo-m2)
changed: [localhost -> None] => (item=tme-foo-m3)
changed: [localhost -> None] => (item=tme-foo-m4)
changed: [localhost -> None] => (item=tme-foo-m5)
changed: [localhost -> None] => (item=tme-foo-m6)
PLAY RECAP **********************************************************
localhost : ok=9 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
Monday 04 November 2019 04:51:01 +0000 (0:00:01.980) 0:00:24.903 *******
=====================================================================
prepare : Test VM --------------------------------------------- 4.57s
prepare : Gather info about VM -------------------------------- 4.19s
prepare : Upload new sudoers ---------------------------------- 4.12s
prepare : Upload authorized_keys ------------------------------ 3.59s
prepare : Create ansible user --------------------------------- 3.28s
Gathering Facts ----------------------------------------------- 0.89s
prepare : Register IP in inventory ---------------------------- 0.16s
Playbook run took 0 days, 0 hours, 0 minutes, 20 seconds
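Before the prepare run can log in to the guests, it has to learn each VM's address from vCenter (the "Gather info about VM" and "Register IP in inventory" tasks). A minimal sketch of that lookup, assuming community.vmware.vmware_guest_info and VMware Tools running in each guest so that the instance.ipv4 field is populated; the register variable vm_info is a hypothetical name, and the author's role may do this differently.

```yaml
---
# Hypothetical sketch of the IP lookup done before the guests are reachable.
- hosts: localhost
  gather_facts: false
  tasks:
    - name: Gather info about VM
      community.vmware.vmware_guest_info:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false          # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        name: "{{ item }}"
      loop: "{{ groups[machine_group] }}"
      register: vm_info                # hypothetical variable name

    - name: Register IP in inventory
      # Sets ansible_host in the in-memory inventory for the rest of this run;
      # the hosts file on disk is not modified.
      ansible.builtin.add_host:
        name: "{{ item.item }}"
        ansible_host: "{{ item.instance.ipv4 }}"
      loop: "{{ vm_info.results }}"
      loop_control:
        label: "{{ item.item }}"       # print only the VM name, not the full result
```

The loop_control label is one way to avoid the very large output blurb that the real run produced for this task.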

At this stage, things should be in a pristine state. Let’s move on.

Managing Virtual Machines

The basic inventory file we created should now be usable. Let’s ping our machine farm:

$ ansible -m ping machines
tme-foo-m5 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
tme-foo-m4 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
tme-foo-m3 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
tme-foo-m2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
tme-foo-m1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
tme-foo-m6 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}

As a good Linux citizen you always want to update to all the latest packages. I provided a crude package_update.yaml file for your convenience. It will also reboot the VMs once completed.
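The package_update.yaml file itself isn't shown here. A minimal sketch of the same idea, assuming the CentOS 7 guests, privilege escalation through the sudoers file uploaded during prepare, and an Ansible release that still ships the yum module (recent ansible-core releases have removed it). This version reboots only when packages actually changed; that is a choice of the sketch, not necessarily what the author's file does.

```yaml
---
# Hypothetical sketch; the author's package_update.yaml may differ.
- hosts: machines
  become: true
  tasks:
    - name: Update all packages to the latest version
      ansible.builtin.yum:
        name: "*"
        state: latest
      register: yum_update

    - name: Reboot once the update has completed
      ansible.builtin.reboot:
      when: yum_update is changed      # skip the reboot if nothing was updated
```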

Important: The default password for the root user is still what that template shipped with. If you intend to use this for anything but a sandbox exercise, consider changing that root password.
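One way to act on that advice, sketched under assumptions: a play against the machines group that prompts for a new root password and sets it with ansible.builtin.user and the password_hash filter (on some control nodes, such as macOS, that filter needs the passlib Python package). The variable name new_root_password is hypothetical. If any playbook still logs in as root, machine_initial_password in host_vars/localhost would need updating afterward.

```yaml
---
# Hypothetical sketch: replace the template's default root password.
- hosts: machines
  become: true
  vars_prompt:
    - name: new_root_password          # hypothetical variable name
      prompt: "New root password"
      private: true                    # do not echo the input
  tasks:
    - name: Set a new root password
      ansible.builtin.user:
        name: root
        # The user module expects a crypted hash, not the clear-text password
        password: "{{ new_root_password | password_hash('sha512') }}"
      no_log: true                     # keep the hash out of the log
```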

Snapshot and Restore Virtual Machines

Now to the fun part. I’ve redacted a lot of the content I created for this project for many reasons, but it involved making customizations and installing proprietary software. In the various stages I wanted to create snapshots as some of these steps were not only lengthy, they were one-way streets. Creating a snapshot of the environment was indeed very handy.
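Each snapshot boils down to one vCenter call per VM. A hypothetical sketch of what a snapshot playbook could do, assuming community.vmware.vmware_guest_snapshot and a snapshot extra variable passed with -e on the command line; the repository's snapshot.yaml may be structured differently.

```yaml
---
# Hypothetical snapshot sketch; run with: ansible-playbook <file> -e snapshot=<name>
- hosts: localhost
  gather_facts: false
  tasks:
    - name: Create a VM snapshot
      community.vmware.vmware_guest_snapshot:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false          # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        name: "{{ item }}"
        snapshot_name: "{{ snapshot }}"
        state: present
      loop: "{{ groups[machine_group] }}"
```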

To create a VM snapshot for the machines group:

$ ansible-playbook snapshot.yaml -e snapshot=goldenboy
PLAY [localhost] ****************************************************
TASK [Gathering Facts] *********************************************************************
Monday 04 November 2019 05:09:25 +0000 (0:00:00.096) 0:00:00.096 *******
ok: [localhost]
TASK [snapshot : Create a VM snapshot] *********************************************************************
Monday 04 November 2019 05:09:27 +0000 (0:00:01.893) 0:00:01.989 *******
changed: [localhost -> localhost] => (item=tme-foo-m1)
changed: [localhost -> localhost] => (item=tme-foo-m2)
changed: [localhost -> localhost] => (item=tme-foo-m3)
changed: [localhost -> localhost] => (item=tme-foo-m4)
changed: [localhost -> localhost] => (item=tme-foo-m5)
changed: [localhost -> localhost] => (item=tme-foo-m6)
PLAY RECAP **********************************************************
localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
Monday 04 November 2019 05:09:35 +0000 (0:00:08.452) 0:00:10.442 *******
=====================================================================
snapshot : Create a VM snapshot ------------------------------- 8.45s
Gathering Facts ----------------------------------------------- 1.89s
Playbook run took 0 days, 0 hours, 0 minutes, 10 seconds

It’s now possible to trash the VMs. If you ever want to go back:

$ ansible-playbook restore.yaml -e snapshot=goldenboy
PLAY [localhost] ****************************************************
TASK [Gathering Facts] *********************************************************************
Monday 04 November 2019 05:11:38 +0000 (0:00:00.104) 0:00:00.104 *******
ok: [localhost]
TASK [restore : Revert a VM to a snapshot] *********************************************************************
Monday 04 November 2019 05:11:38 +0000 (0:00:00.860) 0:00:00.964 *******
changed: [localhost -> localhost] => (item=tme-foo-m1)
changed: [localhost -> localhost] => (item=tme-foo-m2)
changed: [localhost -> localhost] => (item=tme-foo-m3)
changed: [localhost -> localhost] => (item=tme-foo-m4)
changed: [localhost -> localhost] => (item=tme-foo-m5)
changed: [localhost -> localhost] => (item=tme-foo-m6)
TASK [restore : Power On VM] *********************************************************************
Monday 04 November 2019 05:11:47 +0000 (0:00:08.466) 0:00:09.431 *******
changed: [localhost -> localhost] => (item=tme-foo-m1)
changed: [localhost -> localhost] => (item=tme-foo-m2)
changed: [localhost -> localhost] => (item=tme-foo-m3)
changed: [localhost -> localhost] => (item=tme-foo-m4)
changed: [localhost -> localhost] => (item=tme-foo-m5)
changed: [localhost -> localhost] => (item=tme-foo-m6)
PLAY RECAP **********************************************************
localhost : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
Monday 04 November 2019 05:12:02 +0000 (0:00:15.232) 0:00:24.663 *******
=====================================================================
restore : Power On VM ---------------------------------------- 15.23s
restore : Revert a VM to a snapshot --------------------------- 8.47s
Gathering Facts ----------------------------------------------- 0.86s
Playbook run took 0 days, 0 hours, 0 minutes, 24 seconds
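The restore run above consists of two tasks: revert, then power on. A snapshot taken without the VM's memory state (vmware_guest_snapshot's memory_dump option defaults to off) reverts to a powered-off VM, which is likely why the second task is there. A hypothetical sketch of both steps, assuming the community.vmware modules and the same snapshot extra variable:

```yaml
---
# Hypothetical restore sketch; run with: ansible-playbook <file> -e snapshot=<name>
- hosts: localhost
  gather_facts: false
  tasks:
    - name: Revert a VM to a snapshot
      community.vmware.vmware_guest_snapshot:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false          # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        name: "{{ item }}"
        snapshot_name: "{{ snapshot }}"
        state: revert
      loop: "{{ groups[machine_group] }}"

    - name: Power On VM
      # A disk-only snapshot comes back powered off, so turn each VM back on
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        name: "{{ item }}"
        state: poweredon
      loop: "{{ groups[machine_group] }}"
```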

Destroy Virtual Machines

I like things neat and tidy. This is how you would clean up after yourself:

$ ansible-playbook destroy.yaml
PLAY [localhost] ****************************************************
TASK [Gathering Facts] *********************************************************************
Monday 04 November 2019 05:13:12 +0000 (0:00:00.099) 0:00:00.099 *******
ok: [localhost]
TASK [destroy : Destroy a virtual machine] *********************************************************************
Monday 04 November 2019 05:13:13 +0000 (0:00:00.870) 0:00:00.969 *******
changed: [localhost -> localhost] => (item=tme-foo-m1)
changed: [localhost -> localhost] => (item=tme-foo-m2)
changed: [localhost -> localhost] => (item=tme-foo-m3)
changed: [localhost -> localhost] => (item=tme-foo-m4)
changed: [localhost -> localhost] => (item=tme-foo-m5)
changed: [localhost -> localhost] => (item=tme-foo-m6)
PLAY RECAP **********************************************************
localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
Monday 04 November 2019 05:13:37 +0000 (0:00:24.141) 0:00:25.111 *******
=====================================================================
destroy : Destroy a virtual machine -------------------------- 24.14s
Gathering Facts ----------------------------------------------- 0.87s
Playbook run took 0 days, 0 hours, 0 minutes, 25 seconds
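Tearing the environment down is the same vmware_guest call with state: absent. A minimal sketch, assuming community.vmware.vmware_guest; force: true lets the module remove VMs that are still powered on, which is what you want when the VMs were just in use. The repository's destroy.yaml may differ.

```yaml
---
# Hypothetical destroy sketch; deletes every VM in the machines group.
- hosts: localhost
  gather_facts: false
  tasks:
    - name: Destroy a virtual machine
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false          # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        name: "{{ item }}"
        state: absent
        force: true                    # remove the VM even if it is powered on
      loop: "{{ groups[machine_group] }}"
```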

Summary

I probably dissed VMware more than necessary in this post. It’s a great infrastructure platform that is being deployed by 99% of the IT shops out there (don’t quote me on that). I hope you enjoyed this tutorial on how to make vSphere useful with Ansible.

Trivia: This tutorial was brought to you by one of the first few HPE Nimble Storage dHCI systems ever brought up!


Advantages Of AWS Online Training

Advantages of AWS Course

1. First things first: cost

AWS can cost slightly more than GCP, depending on the products you use. For example, AWS RDS is priced slightly higher than its GCP counterpart, and EC2 instances are priced higher than Google Compute Engine (GCE) instances. Here are some comparisons that helped me with my research.

2. Second: instances and their availability

If you really care about instance quality and availability (and I think everyone should), you should accept that it's going to cost a little extra. AWS has more availability zones than any other cloud provider, so your software can perform the same all around the world. AWS also offers more instance types and images. The ones I used most were Ubuntu, Debian, and Windows Server, but who knows, you may need Oracle Linux, which Google doesn't offer.

3. Third: variety of products and technologies

Google and AWS both offer a wide range of products and technologies, but AWS has more than GCP, such as Amazon SQS, Kinesis, Kinesis Firehose, Redshift, and Aurora. AWS also has a great monitoring tool, CloudWatch, that works across its products. If you are using AWS, you really should use CloudWatch.

4. Finally: ease of development and integration

Both have really good documentation. AWS needs a little more effort when you develop with and learn a new product: it gives you more control over what you do, but it's easy to break things in AWS if you don't know what you are doing. I guess that's true of any cloud platform; this is just my experience. On the other hand, AWS supports languages that GCP doesn't, such as Node.js and Ruby. If you like out-of-the-box deployment platforms such as Elastic Beanstalk or Google App Engine (GAE), note that GAE supports only some languages (Go, Python, PHP, Java), while Elastic Beanstalk supports several others as well. There may be other differences too, such as the customer and developer support each company offers.

AWS Online Training institute: https://www.visualpath.in/amazon-web-services-aws-training.html


What are the automation tools used in software testing?

A test automation tool is software built to define software testing tasks strategically and then run them with as little human intervention as possible.

The following are the automation tools used in software testing:

1. Testim: This tool uses machine learning (ML) to help developers author, execute, and maintain automated tests. Test cases can be created and executed quickly on many mobile and web platforms, and the tool continuously learns from data to improve itself.

2. TestComplete: An automation testing tool for desktop, mobile, and web testing. Test scripts can be written in C++Script, Python, VBScript, or JavaScript, among others. Its object recognition engine can accurately detect dynamic user interface elements.

3. Selenium: One of the most popular automation tools, used specifically to test web applications. Selenium scripts can be written in popular programming languages such as Python, Perl, Ruby, C#, Java, and JavaScript, and it is compatible with various operating systems and browsers. The Selenium suite consists of four main tools: Selenium IDE (Integrated Development Environment), Selenium Grid, Selenium WebDriver, and Selenium RC (Remote Control, now deprecated in favor of WebDriver).

4. Ranorex: This tool automates GUI testing for desktop, mobile, and web applications, with scripts in languages such as C# and VB.NET. RanoreXPath and Ranorex Spy are used for reliable recognition of GUI elements. It supports parallel or distributed testing through Selenium Grid and can be integrated with CI/CD tools.

5. LambdaTest: This tool offers automation testing in the cloud. Teams can use its cloud service to scale up test coverage with cross-device and cross-browser testing. It supports Cypress test scripts with cross-browser and parallel execution, provides geolocation testing of websites across many countries, and integrates easily with CI/CD tools.
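The tools above are commercial products or need a real browser, but the core idea in the definition (describe the checks once, then run them without anyone clicking through the app) can be shown with Python's standard-library unittest. This is a minimal sketch, not how any of the listed tools works internally; slugify is a hypothetical function under test invented for the example.

```python
import io
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: lower-case a title and join its words with '-'."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    # Each test method is one scripted check that runs with no manual steps.
    def test_lowercases(self):
        self.assertEqual(slugify("Selenium"), "selenium")

    def test_joins_words(self):
        self.assertEqual(slugify("What are automation tools"), "what-are-automation-tools")

    def test_collapses_whitespace(self):
        self.assertEqual(slugify("  Testim   and  Ranorex "), "testim-and-ranorex")


def run_suite() -> unittest.TestResult:
    """Load the test cases and run them unattended, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTests)
    # Send the runner's report to a buffer so a caller (e.g. a CI job) decides what to print.
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite)


if __name__ == "__main__":
    result = run_suite()
    print(f"ran {result.testsRun} tests, {len(result.failures)} failures")
```

The same pattern scales up: a CI server runs the suite on every commit and fails the build when wasSuccessful() is false, which is the "minimal human intervention" part.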


If you did not already know

Apache Thrift
The Apache Thrift software framework, for scalable cross-language services development, combines a software stack with a code generation engine to build services that work efficiently and seamlessly between C++, Java, Python, PHP, Ruby, Erlang, Perl, Haskell, C#, Cocoa, JavaScript, Node.js, Smalltalk, OCaml, Delphi, and other languages.

IBN-Net
Convolutional neural networks (CNNs) have achieved great success in many computer vision problems. Unlike existing works that designed CNN architectures to improve performance on a single task in a single domain and that do not generalize, we present IBN-Net, a novel convolutional architecture that remarkably enhances a CNN's modeling ability on one domain (e.g. Cityscapes) as well as its generalization capacity on another domain (e.g. GTA5) without fine-tuning. IBN-Net carefully integrates Instance Normalization (IN) and Batch Normalization (BN) as building blocks, and can be wrapped into many advanced deep networks to improve their performance. This work has three key contributions. (1) By delving into IN and BN, we show that IN learns features that are invariant to appearance changes, such as colors, styles, and virtuality/reality, while BN is essential for preserving content-related information. (2) IBN-Net can be applied to many advanced deep architectures, such as DenseNet, ResNet, ResNeXt, and SENet, and consistently improves their performance without increasing computational cost. (3) When the trained networks are applied to new domains, e.g. from GTA5 to Cityscapes, IBN-Net achieves improvements comparable to domain adaptation methods, even without using data from the target domain. With IBN-Net, we won 1st place in the WAD 2018 Challenge Drivable Area track, with an mIoU of 86.18%.

UNet++
In this paper, we present UNet++, a new, more powerful architecture for medical image segmentation. Our architecture is essentially a deeply supervised encoder-decoder network where the encoder and decoder sub-networks are connected through a series of nested, dense skip pathways. The redesigned skip pathways aim at reducing the semantic gap between the feature maps of the encoder and decoder sub-networks. We argue that the optimizer would deal with an easier learning task when the feature maps from the decoder and encoder networks are semantically similar. We have evaluated UNet++ in comparison with U-Net and wide U-Net architectures across multiple medical image segmentation tasks: nodule segmentation in low-dose chest CT scans, nuclei segmentation in microscopy images, liver segmentation in abdominal CT scans, and polyp segmentation in colonoscopy videos. Our experiments demonstrate that UNet++ with deep supervision achieves an average IoU gain of 3.9 and 3.4 points over U-Net and wide U-Net, respectively.

Alpha Model-Agnostic Meta-Learning (Alpha MAML)
Model-agnostic meta-learning (MAML) is a meta-learning technique to train a model on a multitude of learning tasks in a way that primes the model for few-shot learning of new tasks. The MAML algorithm performs well on few-shot learning problems in classification, regression, and fine-tuning of policy gradients in reinforcement learning, but comes with the need for costly hyperparameter tuning for training stability. We address this shortcoming by introducing an extension to MAML, called Alpha Model-agnostic meta-learning, to incorporate an online hyperparameter adaptation scheme that eliminates the need to tune meta-learning and learning rates. Our results with the Omniglot database demonstrate a substantial reduction in the need to tune MAML training hyperparameters and improvement to training stability with less sensitivity to hyperparameter choice.

https://analytixon.com/2023/01/27/if-you-did-not-already-know-1950/?utm_source=dlvr.it&utm_medium=tumblr
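The IBN-Net entry describes mixing Instance Normalization and Batch Normalization inside one block. Below is a minimal NumPy sketch of that idea, loosely modeled on the paper's IBN-a variant as I understand it (IN on half of a layer's channels, BN on the rest). The 50/50 split, the missing learnable scale and shift, and the use of current-batch statistics only (no running averages) are simplifications, and the function names are hypothetical.

```python
import numpy as np


def instance_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each (sample, channel) feature map over its spatial dims H, W."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def batch_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel over batch and spatial dims N, H, W (training-mode statistics)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def ibn(x: np.ndarray, in_ratio: float = 0.5) -> np.ndarray:
    """Apply IN to the first in_ratio of the channels and BN to the rest, then re-join them.

    x has layout (N, C, H, W).
    """
    split = int(x.shape[1] * in_ratio)
    return np.concatenate([instance_norm(x[:, :split]), batch_norm(x[:, split:])], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(3.0, 2.0, size=(4, 8, 5, 5))
    y = ibn(x)
    print(y.shape)
```

The split is the point of the design: the IN half discards per-image appearance statistics (the invariance the abstract mentions), while the BN half keeps differences between images that carry content.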
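UNet++'s "nested, dense skip pathways" are easiest to see as wiring. In the paper's notation, node X^{i,j} sits at down-sampling level i and position j along the skip pathway: an encoder node (j = 0) takes the down-sampled output of the level above, and every other node concatenates all earlier nodes on its own level with the up-sampled output of X^{i+1,j-1}. The sketch below only enumerates these connections, with no convolutions; node_inputs and build_grid are hypothetical names.

```python
# An edge is (how the feature map arrives, (level i, position j) of the source node).
Edge = tuple[str, tuple[int, int]]


def node_inputs(i: int, j: int) -> list[Edge]:
    """Return the feature maps that feed node X[i][j] in a UNet++ grid."""
    if j == 0:
        # Encoder backbone: X[0][0] reads the input image; deeper levels read the level above.
        return [] if i == 0 else [("down", (i - 1, 0))]
    # Nested dense skip: every earlier node on level i, plus the up-sampled node one level down.
    same_level = [("skip", (i, k)) for k in range(j)]
    return same_level + [("up", (i + 1, j - 1))]


def build_grid(depth: int) -> dict[tuple[int, int], list[Edge]]:
    """Enumerate all nodes of a UNet++ with `depth` encoder levels (nodes with i + j < depth)."""
    return {(i, j): node_inputs(i, j) for i in range(depth) for j in range(depth - i)}


if __name__ == "__main__":
    for node, inputs in sorted(build_grid(5).items()):
        print(node, inputs)
```

With five levels this gives 15 nodes, and the top-row nodes X^{0,1} to X^{0,4} are the outputs that deep supervision attaches losses to; plain U-Net corresponds to keeping only the encoder column and the direct X^{i,0} to decoder skips.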
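The Alpha MAML entry only says it adds "an online hyperparameter adaptation scheme". As far as I know, the paper builds on hypergradient descent (Baydin et al., "Online Learning Rate Adaptation with Hypergradient Descent"), which treats the learning rate as a parameter and nudges it by the dot product of the current and previous gradients. The sketch below shows that rule on plain SGD with a toy quadratic loss, not on MAML's inner and outer loops; the loss, step sizes, and the names grad and sgd_hd are assumptions for illustration.

```python
import numpy as np


def grad(theta: np.ndarray, curvature: np.ndarray) -> np.ndarray:
    """Gradient of the toy loss f(theta) = 0.5 * sum(curvature * theta**2)."""
    return curvature * theta


def sgd_hd(theta0, curvature, alpha0=0.01, beta=0.001, steps=200):
    """SGD whose learning rate alpha is adapted online by hypergradient descent.

    With beta=0.0 this reduces to ordinary SGD with a fixed learning rate.
    """
    theta = np.asarray(theta0, dtype=float)
    curvature = np.asarray(curvature, dtype=float)
    alpha = alpha0
    prev_g = np.zeros_like(theta)
    for _ in range(steps):
        g = grad(theta, curvature)
        # theta came from theta_prev - alpha * prev_g, so d f(theta) / d alpha = -g . prev_g;
        # a gradient-descent step on alpha therefore adds beta * (g . prev_g).
        alpha += beta * float(g @ prev_g)
        theta = theta - alpha * g
        prev_g = g
    return theta, alpha


if __name__ == "__main__":
    theta, alpha = sgd_hd([1.0, 1.0], [1.0, 4.0])
    print(theta, alpha)
```

When successive gradients point the same way, the learning rate grows; when they start to oscillate, it shrinks. That self-correction is what removes the need to hand-tune the rate, which is the benefit the abstract claims for MAML.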


5 best code editor tools for JavaScript

If you want to beautify and organize your JavaScript code, you need a suitable editor or tool. In this article, we introduce some of the best editors and tools for the job.

Beautifierjs

JS Beautifier is one of the best and most successful online JavaScript beautifiers available. It is simple to use: paste your code into its editor, and it organizes and beautifies it. The tool is free, is considered one of the most accurate sites for beautifying and organizing JavaScript code, and has attracted many users to date.

The advantages of this tool are:

Its user interface is simple and easy to understand, so even beginners can use it comfortably.

It is free; you don't need to pay to use its services.

It does not contain annoying ads.
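A real beautifier like JS Beautifier handles far more than indentation (spacing, line breaks, strings, comments), and the project also publishes a Python package, jsbeautifier. The toy below only illustrates the core re-indentation idea by tracking bracket depth line by line; reindent is a hypothetical helper, and it deliberately ignores brackets inside strings, comments, and regex literals and does not reflow long lines.

```python
OPENERS = "{[("
CLOSERS = "}])"


def reindent(code: str, indent: str = "  ") -> str:
    """Re-indent bracket-delimited code by nesting depth.

    Toy only: brackets inside strings, comments, and regex literals are counted
    too, and long lines are not reflowed.
    """
    depth = 0
    out = []
    for raw in code.splitlines():
        line = raw.strip()
        if not line:
            out.append("")  # keep blank lines, drop their stray whitespace
            continue
        # A line that starts with a closing bracket sits one level shallower.
        shift = 1 if line[0] in CLOSERS else 0
        out.append(indent * max(depth - shift, 0) + line)
        # Update the depth by this line's net count of opening minus closing brackets.
        depth += sum(line.count(c) for c in OPENERS)
        depth -= sum(line.count(c) for c in CLOSERS)
        depth = max(depth, 0)
    return "\n".join(out)


if __name__ == "__main__":
    messy = "function f(x) {\nif (x) {\nreturn 1;\n}\nreturn 0;\n}"
    print(reindent(messy))
```

Counting brackets per line is enough for neatly written code like the example, but it is exactly why production beautifiers tokenize first: one "{" inside a string literal would shift every following line.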

Atom